Web applications are becoming popular, not least because of Google’s massive effort to push everything through the browser (Chrome OS being the most extreme example, where everything runs through a browser interface).

Before WebGL, the only way to create efficient graphics was through plug-ins, such as Adobe’s Flash, Microsoft’s Silverlight, Unity, or Google’s O3D and Native Client. WebGL, in contrast, is a vendor-independent technology, directly integrated with the browser’s JavaScript language and DOM.

Unfortunately, WebGL browser support is limited. WebGL is not available in Internet Explorer on Windows, and is not enabled by default in Safari on Mac OS X. This means that roughly 50% of all internet users won’t have access to WebGL content. WebGL is not supported on iOS devices either (even though it is accessible for iAds, and can be enabled on jail-broken devices).

What is worse, Microsoft does not even plan to support WebGL, since it considers it a security threat. Their concerns are reasonable, but their solution is not: it would be much better if they simply showed a dialog box warning the user that executing WebGL poses a security risk and offering a choice to continue or not, the same way they warn about plugins and downloaded executables.

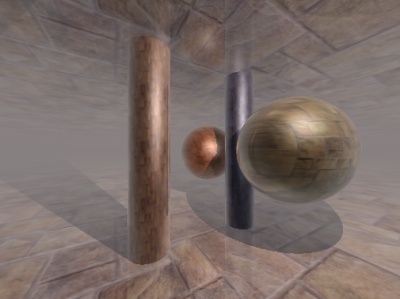

Some very impressive stuff has been done using WebGL, though: for instance ro.me, Path Tracing (Evan Wallace), Cars (Altered Qualia), Terrain Editor (Rob Chadwick), Traveling Wavefronts (Felix Woitzel), Hartverdrahtet.

Using WebGL for Fractals

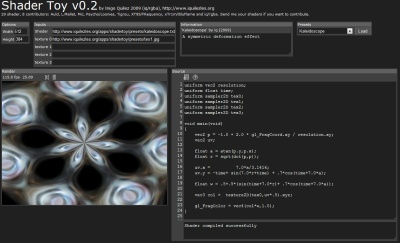

There are already some great tools available for experimenting with WebGL: ShaderToy, GLSLSandbox, WebGL Playground. Their main weakness is that it is difficult to store state information (for instance, if you want a movable camera), since this cannot be done in the shader itself, without using weird hacks. So, I decided to start out from scratch to get a feeling for WebGL.
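To illustrate what keeping state outside the shader can look like, here is a minimal sketch of a movable 2D view held in plain JavaScript. The names `createView`, `pan`, and `zoom`, and the starting values, are my own assumptions for illustration and are not taken from the demo code.

```javascript
// View state lives in JavaScript; the shader only ever sees the current values.
function createView() {
  // Hypothetical starting point: roughly centred on the Mandelbrot set.
  return { centerX: -0.5, centerY: 0.0, scale: 1.5 };
}

// Return a new view panned by a mouse drag given in pixels.
// Assumes the visible height of the canvas spans 2 * scale units.
function pan(view, dxPixels, dyPixels, canvasHeight) {
  const unitsPerPixel = (2 * view.scale) / canvasHeight;
  return {
    centerX: view.centerX - dxPixels * unitsPerPixel,
    // Screen y grows downwards, so the sign is flipped for y.
    centerY: view.centerY + dyPixels * unitsPerPixel,
    scale: view.scale
  };
}

// Return a new view zoomed by a factor (below 1 zooms in, above 1 zooms out).
function zoom(view, factor) {
  return {
    centerX: view.centerX,
    centerY: view.centerY,
    scale: view.scale * factor
  };
}
```

Mouse and keyboard handlers would then replace the current view with the result of `pan` or `zoom` and trigger a redraw.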

WebGL (specification) is a JavaScript API based on OpenGL ES 2.0, a subset of the desktop OpenGL version designed for embedded devices such as cell phones.

Being a ‘modern’ OpenGL implementation, WebGL has no support for fixed-function pipeline rendering: there is no matrix stack, no default shaders, and no immediate mode rendering (you cannot use glBegin(…); instead you must use vertex buffers). WebGL also lacks some of the more advanced features of the desktop OpenGL version, such as 3D textures, multiple render targets, and double precision support. Float texture support is an optional extension.

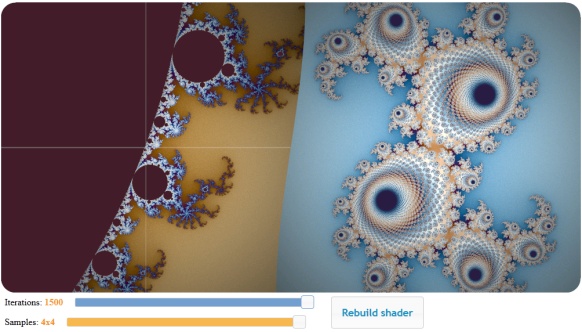

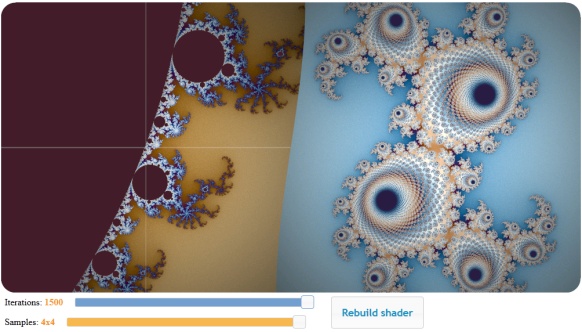

The first example I made was this Mandelbrot viewer: It demonstrates how to initialise WebGL and compile shaders, render a full-canvas quad, and process keyboard and mouse events and pass them through uniforms to the fragment shader.

Click the image to try out the WebGL demo.
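The steps the viewer goes through (compiling shaders, uploading a full-canvas quad, and passing state through uniforms) can be sketched roughly as follows. This is not the code of the demo itself: the helper names, the attribute name `position`, and the uniform names `center` and `scale` are assumptions chosen for illustration. Only standard WebGL 1.0 calls are used.

```javascript
// Vertex shader: passes the quad corners straight through to clip space.
const VERTEX_SOURCE = [
  "attribute vec2 position;",
  "varying vec2 coord;",
  "void main() {",
  "  coord = position;",
  "  gl_Position = vec4(position, 0.0, 1.0);",
  "}"
].join("\n");

// Two triangles covering the whole clip-space square [-1, 1] x [-1, 1].
const QUAD_VERTICES = new Float32Array([-1, -1, 1, -1, -1, 1, -1, 1, 1, -1, 1, 1]);

// Compile one shader stage; throws with the compiler log on failure.
function compileShader(gl, type, source) {
  const shader = gl.createShader(type);
  gl.shaderSource(shader, source);
  gl.compileShader(shader);
  if (!gl.getShaderParameter(shader, gl.COMPILE_STATUS)) {
    throw new Error("Shader compile failed: " + gl.getShaderInfoLog(shader));
  }
  return shader;
}

// Link a vertex and a fragment shader into a program.
function buildProgram(gl, vertexSource, fragmentSource) {
  const program = gl.createProgram();
  gl.attachShader(program, compileShader(gl, gl.VERTEX_SHADER, vertexSource));
  gl.attachShader(program, compileShader(gl, gl.FRAGMENT_SHADER, fragmentSource));
  gl.linkProgram(program);
  if (!gl.getProgramParameter(program, gl.LINK_STATUS)) {
    throw new Error("Program link failed: " + gl.getProgramInfoLog(program));
  }
  return program;
}

// Upload the quad once and bind it to the (assumed) "position" attribute.
function setupQuad(gl, program) {
  const buffer = gl.createBuffer();
  gl.bindBuffer(gl.ARRAY_BUFFER, buffer);
  gl.bufferData(gl.ARRAY_BUFFER, QUAD_VERTICES, gl.STATIC_DRAW);
  const location = gl.getAttribLocation(program, "position");
  gl.enableVertexAttribArray(location);
  gl.vertexAttribPointer(location, 2, gl.FLOAT, false, 0, 0);
  return buffer;
}

// Push the current view to the (assumed) uniforms and draw the quad.
function drawQuad(gl, program, view) {
  gl.useProgram(program);
  gl.uniform2f(gl.getUniformLocation(program, "center"), view.centerX, view.centerY);
  gl.uniform1f(gl.getUniformLocation(program, "scale"), view.scale);
  gl.drawArrays(gl.TRIANGLES, 0, 6);
}
```

In a browser, `gl` would come from something like `canvas.getContext("webgl") || canvas.getContext("experimental-webgl")`, and `drawQuad` would be called again whenever a keyboard or mouse handler changes the view.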

A few programming comments. First JavaScript: I’m not very fond of JavaScript’s type system. The loose typing means that you risk finding bugs later, at run time, instead of when compiling. It also means that it can be hard to read third-party code (what kind of parameters are you supposed to provide to a function like ‘update(ev, ui)’?). As for numerical types, JavaScript only has the Number type, an IEEE 754 double precision type. There are no integers! Some browsers also silently ignore errors at run time, which makes it even harder to find bugs. On the positive side are the quick iteration time and the Firebug Firefox plugin, which is an extremely powerful tool for debugging web and JavaScript code.
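A few lines are enough to see what the single Number type means in practice. This is only an illustration of standard JavaScript behaviour; the variable names are mine.

```javascript
// Integer-looking and fractional literals share one type.
const sameType = typeof 1 === typeof 1.5;                // both are "number"

// Doubles represent integers exactly only up to 2^53.
const largestExact = Math.pow(2, 53);                    // 9007199254740992
const collides = largestExact + 1 === largestExact;      // the +1 is lost to rounding

// Decimal fractions are binary approximations.
const sumIsExact = 0.1 + 0.2 === 0.3;                    // false

// Bitwise operators coerce to a 32-bit integer, a common truncation idiom.
const truncated = (7 / 2) | 0;                           // 3
```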

As for the HTML, I still find it difficult to do table-less layout using floating divs and CSS. I’m missing the flexible layout managers that many desktop UI kits provide, which make it easy to align components and control how they scale when resized (but I may be biased towards desktop UIs). Also, as HTML was not designed with UI widgets in mind, you have to use a third-party library to display a simple slider: I chose jQuery UI, which was easy to set up and use.

Finally, the WebGL: the WebGL GLSL shader code is very similar to the desktop GLSL dialect. The biggest difference is the way loops are handled. Only ‘for’ loops are available, and with a very restricted syntax. It seems the iteration count must be determinable at compile time (probably because some implementations unroll all loops), which means you can no longer use uniforms to control the loops (you can, however, ‘break’ out of loops dynamically based on run-time variables). This means that in order to pass the iteration count and number of samples to the Mandelbrot shader, I have to do direct text substitutions in the shader code and recompile.
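The text substitution can be sketched like this. The shader below is a simplified stand-in rather than the actual demo shader, and the placeholder name `MAX_ITERATIONS`, the template, and the function `buildShaderSource` are assumptions made up for the example.

```javascript
// Fragment shader template. MAX_ITERATIONS is a placeholder token that is
// replaced by an integer literal before compiling, so the loop bound is a
// compile-time constant.
const MANDELBROT_TEMPLATE = [
  "precision mediump float;",
  "uniform vec2 center;",
  "uniform float scale;",
  "varying vec2 coord;",
  "void main() {",
  "  vec2 c = center + coord * scale;",
  "  vec2 z = vec2(0.0);",
  "  int n = 0;",
  "  for (int i = 0; i < MAX_ITERATIONS; i++) {",
  "    if (dot(z, z) > 4.0) break;  // dynamic break is still allowed",
  "    z = vec2(z.x * z.x - z.y * z.y, 2.0 * z.x * z.y) + c;",
  "    n = i;",
  "  }",
  "  gl_FragColor = vec4(vec3(float(n) / float(MAX_ITERATIONS)), 1.0);",
  "}"
].join("\n");

// Replace every placeholder named in `constants` with its integer value.
// Example: buildShaderSource(template, { MAX_ITERATIONS: 200 })
function buildShaderSource(template, constants) {
  return Object.keys(constants).reduce(function (source, name) {
    const value = constants[name];
    if (!Number.isInteger(value) || value < 1) {
      throw new RangeError(name + " must be a positive integer");
    }
    // \b keeps the match to whole identifiers only.
    return source.replace(new RegExp("\\b" + name + "\\b", "g"), String(value));
  }, template);
}
```

Whenever the iteration slider changes, the resulting string has to be compiled and linked into a new program, which is why changing these values is noticeably slower than changing a uniform.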

But my biggest frustration was caused by the ANGLE translation layer. Even for this very simple example, I had several issues with ANGLE – see the notes below.

Feel free to use the example as a starting point for further experiments – it is quite simple to modify the 2D shader code.

Notes about ANGLE

A problem with WebGL is poor graphics driver support for OpenGL. Chrome and Firefox have chosen a very radical approach to solve this: on Windows, they convert all WebGL GLSL shader code into DirectX 9 HLSL code through a converter called ANGLE. Their rationale is that OpenGL 2.0 drivers are not available on all computers. However, several shaders won’t run due to the ANGLE translation, and compilation can be extremely slow. As for drivers, older machines with integrated graphics might be affected, but anything with an Nvidia, AMD, or Intel HD graphics card less than five years old should work with OpenGL 2.0.

In my experiments above, I ran into a bug that in some cases makes loops with more than 255 iterations fail (I’ve submitted a bug report).

When debugging ANGLE problems, a good first step is to disable ANGLE and test the shaders. In Chrome, this can be done by starting the executable with the command line argument --use-gl=desktop. You can check your ANGLE version at the URL chrome://gpu-internals/. In Firefox, open about:config and set webgl.force-enabled=true and webgl.prefer-native-gl=true to disable ANGLE.

It is also possible to get the translated HLSL code using the WEBGL_debug_shaders extension. However, this extension is only available for privileged code, which means Chrome must be started with the command line parameter --enable-privileged-webgl-extensions. After that the HLSL source can be obtained by calling:

var hlsl = gl.getExtension("WEBGL_debug_shaders").getTranslatedShaderSource(fragmentShader)
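Since `getExtension` returns null when an extension is unavailable (for instance when the browser was not started in privileged mode), a slightly more defensive variant of the call may be useful. The wrapper name `getTranslatedSource` is my own; the two WebGL calls are the same ones used above.

```javascript
// Return the translated (HLSL) source for a compiled shader, or null when
// the WEBGL_debug_shaders extension is not exposed to the page.
function getTranslatedSource(gl, shader) {
  const ext = gl.getExtension("WEBGL_debug_shaders");
  return ext ? ext.getTranslatedShaderSource(shader) : null;
}
```

A null result then simply means the extension is not enabled, instead of surfacing as a TypeError on the chained call.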

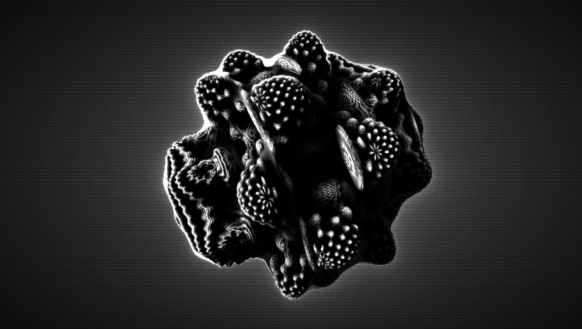

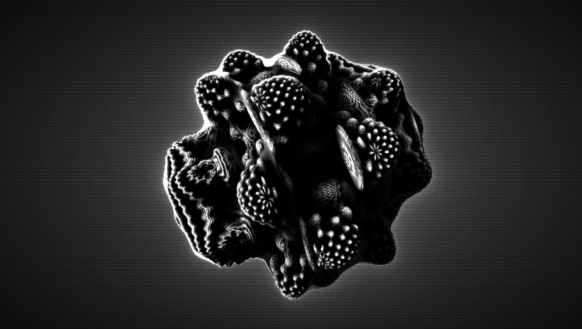

I still haven’t found a workaround for this earlier Mandelbulb experiment (using GLSLSandbox), which fails with another ANGLE bug:

Click the image to try out the WebGL demo (fails on ANGLE systems).

But I’ll try implementing it from scratch to see if I can find the bug.