By making selected variables constant at compile time, some 3D fractals render more than four times faster. Support for easily locking variables has been added to Fragmentarium.

Some time ago, I became aware that the raytracer in Fragmentarium was somewhat slower than both Fractal Labs and Boxplorer for similar systems. This was puzzling, since the DE raycasting technique is pretty much the same. After a bit of investigation, I realized that my standard raytracer had grown slower and slower as new features were added (e.g. reflections, hard shadows, and floor planes), even when the features were turned off!

One way to speed up GLSL code is to mark some variables as constant at compile time. This allows the compiler to optimize code (e.g. unroll loops) and to remove unused code (e.g. the shadow code, if hard shadows are disabled). The drawback is that changing these constant variables requires the GLSL code to be compiled again.
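A minimal sketch of the idea follows. The names (`EnableHardShadows`, `hardShadow`, `shade`, the `LOCKED` macro) and the toy unit-sphere scene are invented for illustration and are not taken from Fragmentarium's raytracer; how Fragmentarium itself rewrites a locked uniform is not shown here.

```glsl
#version 120

// Hypothetical names for illustration only.
#ifdef LOCKED
const bool EnableHardShadows = false;   // compile-time constant
#else
uniform bool EnableHardShadows;         // run-time uniform, set by the host
#endif

// Stand-in for an expensive shadow ray march (toy scene: a unit sphere).
float hardShadow(vec3 p, vec3 lightDir) {
    float t = 0.01;
    for (int i = 0; i < 64; i++) {
        float d = length(p + t * lightDir) - 1.0;
        if (d < 0.001) return 0.0;      // hit something: in shadow
        t += d;
    }
    return 1.0;                         // nothing hit: fully lit
}

vec3 shade(vec3 p, vec3 lightDir, vec3 color) {
    // With the constant variant the compiler can see that this branch is
    // never taken and may drop both the test and the hardShadow() code.
    // With the uniform variant the branch must stay in the compiled shader.
    if (EnableHardShadows) {
        color *= hardShadow(p, lightDir);
    }
    return color;
}

void main() {
    vec3 c = shade(vec3(0.0, 0.0, 2.0), normalize(vec3(1.0)), vec3(1.0));
    gl_FragColor = vec4(c, 1.0);
}
```

Defining `LOCKED` on a line after `#version` selects the constant variant. Whether the branch is actually removed depends on the driver's compiler.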

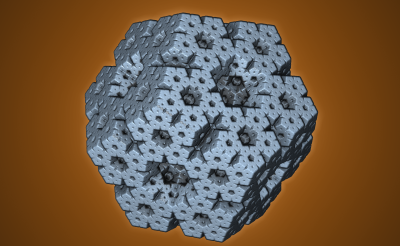

It turned out that this has a great impact on some systems. For instance, take a look at the following render times for ‘Dodecahedron.frag’:

No constants: 1.4 fps (1.0x)

Constant rotation matrices: 3.4 fps (2.4x)

Constant rotation matrices + Anti-alias + DetailAO: 5.6 fps (4.0x)

All 38 parameters (except camera): 6.1 fps (4.4x)

The fractal rotation matrices are the matrices used inside the DE-loop. Without the constant declarations, they must be calculated from scratch for each pixel, even though they are identical for all pixels. Doing the calculation at compile-time gives a notable speedup of 2.4x. (Another approach would be to calculate such frame constants in the vertex shader and pass them to the pixel shader as ‘varying’ variables, but according to this post this is, surprisingly, not very effective.)
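The per-pixel cost can be sketched as follows. `Angle`, `rotationZ` and the toy `DE` are hypothetical stand-ins, not the code from ‘Dodecahedron.frag’, and the `LOCKED` macro is only a way of showing both variants side by side.

```glsl
#version 120

// Hypothetical parameter; not taken from Dodecahedron.frag.
#ifdef LOCKED
const float Angle = 0.5;    // locked: the value is known to the compiler
#else
uniform float Angle;        // unlocked: the value is only known at run time
#endif

// Rotation about the z axis (mat3 constructor takes columns).
mat3 rotationZ(float a) {
    float c = cos(a);
    float s = sin(a);
    return mat3(  c,   s, 0.0,
                 -s,   c, 0.0,
                0.0, 0.0, 1.0);
}

// Toy distance estimator: fold, rotate and shift a few times, then
// measure the distance to a unit sphere.
float DE(vec3 p) {
    // Unlocked: sin/cos and the matrix are evaluated for every pixel,
    // although the result is the same for all of them.
    // Locked: the compiler may fold the whole matrix into constants.
    mat3 rot = rotationZ(Angle);
    for (int i = 0; i < 8; i++) {
        p = rot * abs(p) - vec3(0.5, 0.0, 0.0);
    }
    return length(p) - 1.0;
}

void main() {
    float d = DE(vec3(gl_FragCoord.xy * 0.01, 2.0));
    gl_FragColor = vec4(vec3(d), 1.0);
}
```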

The next speedup, from the ‘Anti-alias’ and ‘DetailAO’ variables, is more subtle. It is difficult to see from the code why these two variables should have such an impact. In fact, it turns out that combinations of other variables will result in the same speedup. But these speedups are not additive! Even if you make all variables constant, the framerate only increases slightly above 5.6 fps. It is not clear why this happens, but I have a guess: it seems that when the complexity is lowered below a certain threshold, the shader code execution speed increases sharply. My guess is that for complex code, the shader runs out of free registers and needs to perform calculations using a slower kind of memory storage.

Interestingly, the ‘iterations’ variable offers no speedup – even though the compiler must be able to unroll the principal DE loop, there is no measurable improvement by doing it.

Finally, the compile time is also greatly reduced when making variables constant. For the ‘Dodecahedron.frag’ code, the compile time is ~2000ms with no constants. By making most variables constant, the compile time is lowered to ~335ms on my system.

Locking in Fragmentarium.

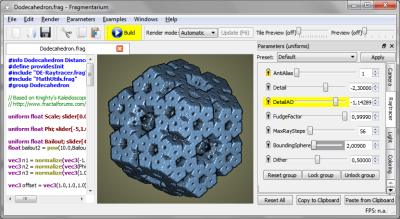

In Fragmentarium, variables can be locked (made compile-time constant) by clicking the padlock next to them. Locked variables appear with a yellow padlock next to them. When a variable is locked, any changes to it will only take effect when the system is compiled (by pressing ‘build’). Locked variables that have been changed will appear with a yellow background until the system is compiled and the changes take effect.

Notice that whole parameter groups may be locked by using the buttons at the bottom.

In the screenshot above, the ‘AntiAlias’ and ‘DetailAO’ variables are locked. ‘DetailAO’ has been changed, but the change has not taken effect yet (hence the yellow background). The ‘BoundingSphere’ variable has a grey background because it has keyboard focus: its value can be fine-tuned using the arrow keys (up/down controls step size, left/right changes value).

In a fragment, a user variable can be marked as locked by default by adding a ‘Locked’ keyword to it:

uniform float Scale; slider[-5.00,2.0,4.00] Locked

Some variables cannot be locked, e.g. the camera settings. Such variables can be marked with the ‘NotLockable’ keyword:

uniform vec3 Eye; slider[(-50,-50,-50),(0,0,-10),(50,50,50)] NotLockable

The same goes for presets. Here the locking mode can be stated, if it is different from the default locking mode:

#preset SomeName

AntiAlias = 1 NotLocked

Detail = -2.81064 Locked

Offset = 1,1,1

...

#endpreset

Locking will be part of Fragmentarium v0.9, which will be released soon.

Nice analysis.

I’ve recently been going through the same kind of process optimizing various GLSL functions (I am also currently obsessed with DE fractals!):

I’ve found harmless-looking ‘if’ statements can also be a source of performance issues. Sometimes you can rewrite them to behave the same way without the condition:

if(a>0.0) b+=c*a;

Is equivalent to:

b+=c*max(a,0.0);

Likewise:

if(a<0.0) b+=c*a;

Is equivalent to:

b+=c*min(a,0.0);

Not sure why the compiler doesn't just do this itself behind the scenes.

Actually, I rewrote several Fragmentarium folds to that form last week! This was based on the post at: http://www.fractalforums.com/3d-fractal-generation/kaleidoscopic-%28escape-time-ifs%29/msg34976/#msg34976 which I’m now guessing was from you 🙂

Hi,

These articles are just awesome. Thank you very much.

About the effectiveness of using the vertex shader for per-frame parameters: in the post you referenced, I wasn’t accurate enough, so I have to give more information. I was using a slow graphics card (GeForce 8400) and the parameter was a matrix. Maybe the slowdown is related to the interpolators: I suspect there were too many varyings.

Quote: “But these speedups are not additive! Even if you make all variables constant, the framerate only increases slightly above 5.6 fps. It is not clear why this happens, but I have a guess: it seems that when the complexity is lowered below a certain threshold, the shader code execution speed increases sharply. My guess is that for complex code, the shader runs out of free registers and needs to perform calculations using a slower kind of memory storage.”

Here is another possible and partial explanation (if I understand the literature about CUDA and GPGPU correctly): on modern GPUs, the driver launches many threads on each multiprocessor in order to hide latency (the number of cycles necessary to complete an operation) and maximize throughput (the number of operations completed per cycle). The latency on the G80, for example, is IIRC 24 clock cycles and the throughput is 1/cycle/scalar_unit. Moreover, there are 8 scalar units per multiprocessor. In reality, the threads are grouped in “warps” of 32. So in order to maximize the throughput, the driver has to launch up to 24*8=192 threads that will execute in parallel on each multiprocessor. Moreover, there is a register file of 8192 floats dedicated to each multiprocessor. In the case where 192 threads are launched, 8192/192=42 registers are available per thread. Now if each thread uses N registers, floor(8192/(N*32)) warps (giving 32*floor(8192/(N*32)) threads) can be executed in parallel.

When the usage of registers is reduced in the shader, the driver can launch more threads, which will help to hide latency and speed up execution.

Flow control is also much faster with constants (in reality no flow control is done) and uniforms (somehow no flow control is done either?) than with varyings and variables.

Anyway, GPUs are quite complex and their optimization is very tricky and a little bit over my head ;o)

Hi Knighty, some good points! I have a few additions:

First, the 24 clock cycles you refer to, must be the read-after-write latency? I don’t think this is always being imposed: often you can reshuffle instructions, so that the next instruction does not depend on the previous one (for instance when using the vector types). But there are worse kinds of latency – for instance accessing global memory takes 400-600 cycles. I don’t know why my simple fractal renderers should access global memory – but perhaps when writing the resulting pixels to the buffer.

In my case, for the dodecahedron system, the glsl system uses 20 registers (found using ‘cgc test.glsl -oglsl -profile gp4fp’), which is lowered to 18-19 by making selected variables constant. But then the system should easily be able to keep at least 192 threads per mp… So there is still something strange about it 🙂
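As a rough check of that claim, the register counts can be plugged into the formula from the previous comment. This assumes the G80 figures quoted there (8192 registers per multiprocessor, warps of 32 threads) also apply to the card used here, which is not confirmed:

```latex
\begin{align*}
N = 20:&\quad \left\lfloor \frac{8192}{20 \cdot 32} \right\rfloor = \lfloor 12.8 \rfloor = 12 \text{ warps} = 12 \cdot 32 = 384 \text{ threads} \\
N = 18:&\quad \left\lfloor \frac{8192}{18 \cdot 32} \right\rfloor = \lfloor 14.2 \rfloor = 14 \text{ warps} = 14 \cdot 32 = 448 \text{ threads}
\end{align*}
```

Both are well above 192 threads, so under these assumptions the register count alone would not be the limiting factor.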
